Policymakers are responding to worries about mental and physical harm from chatbots by proposing rules that require frequent reminders that a chatbot is not human. Those rules assume that reminders will protect people, yet emerging research suggests reminders can fail to change beliefs, reduce trust in helpful systems, or even increase confusion and distress for vulnerable users. Understanding when and how reminders influence behavior is essential for designing systems that protect people without undermining the benefits of conversational technology.

This topic matters because the way we communicate the nature of AI will shape future relationships between people and technology, and that in turn affects learning, care, and access. A careful research agenda could reveal methods that preserve clarity while supporting dignity and inclusion. Explore the full article to learn which approaches researchers think deserve urgent study and how better guidance might strengthen human potential rather than weaken it.

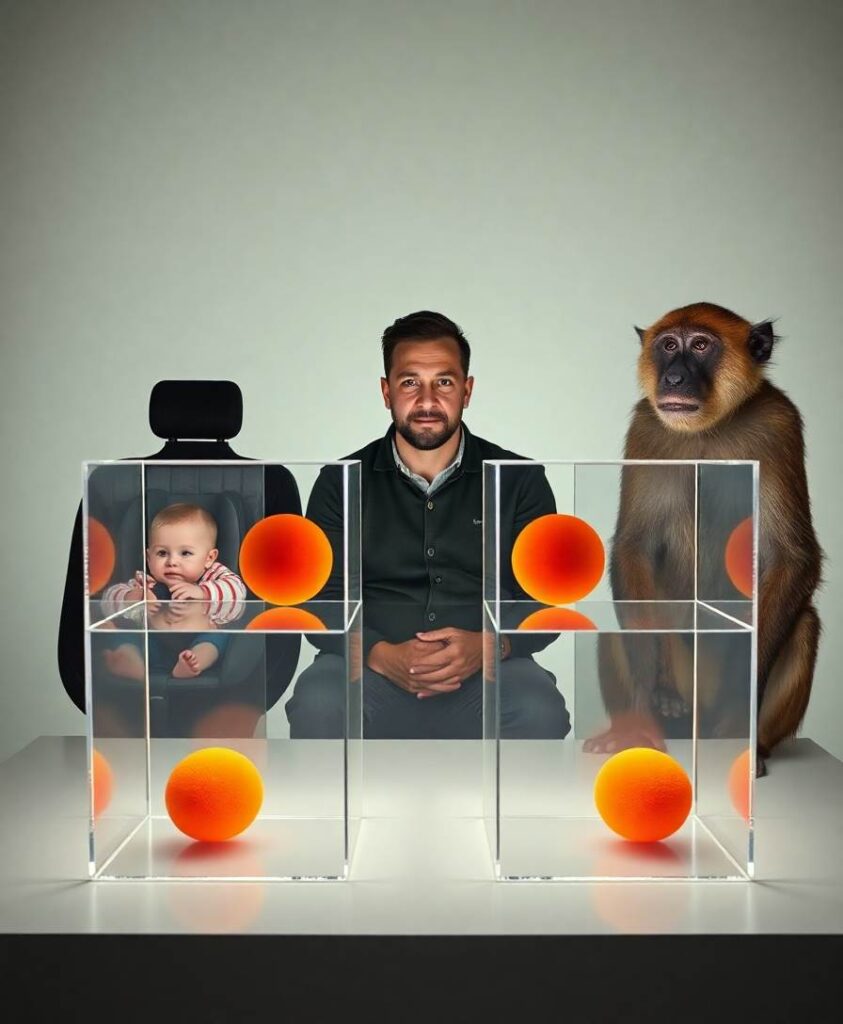

Concerns about mental and physical health harms from chatbots are prompting policies mandating ongoing reminders that chatbots are not human. While these policies are well-intended, evidence suggests that reminders may be either ineffective or harmful to users. Discovering how best to remind people that chatbots are not human is a critical research priority.