
VOLUME LXXXI | SEP 2023

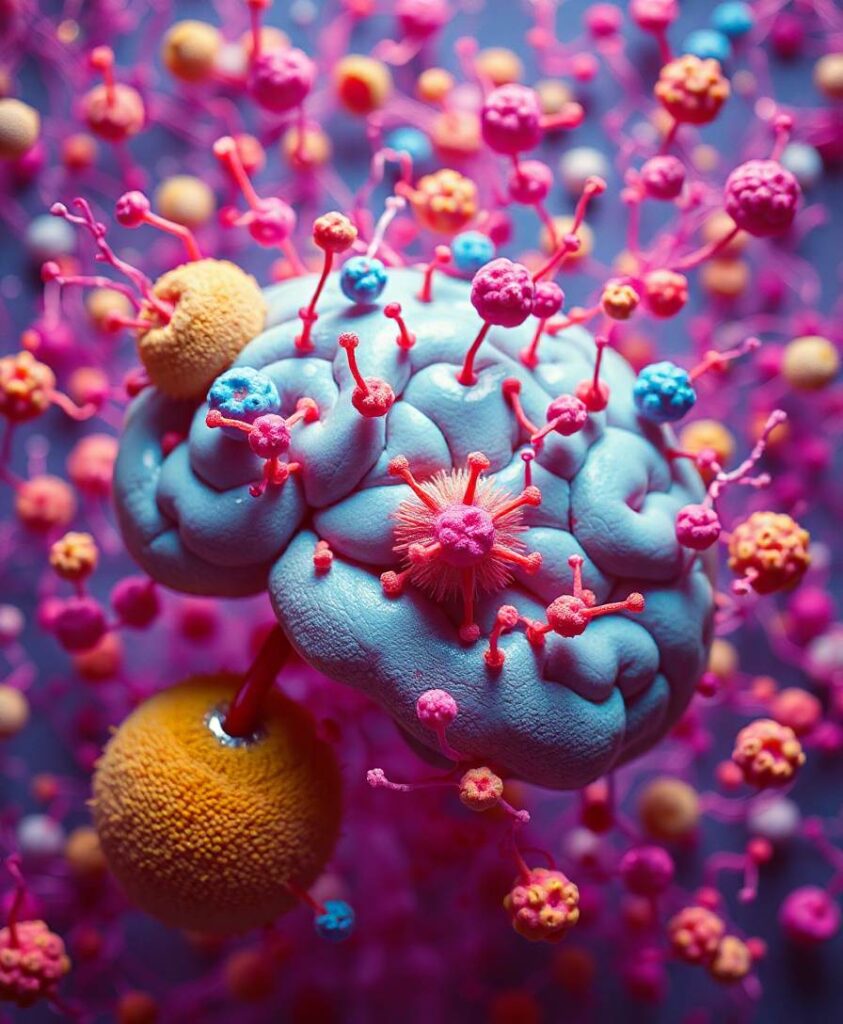

Unraveling the Secrets of Deep Neural Networks

Imagine trying to figure out why your friend made a certain decision based solely on their facial expression. It would be challenging, right? That’s much like the problem scientists face when they try to understand the inner workings of deep neural networks, the complex algorithms that power many of the AI technologies we use today. A recent editorial examines the interpretability of these networks’ decision-making processes. The researchers compare the task to unraveling the secrets behind a magician’s tricks: it’s not easy, but with enough persistence and clever techniques, it can be done! By shedding light on the black-box nature of these networks, scientists hope to uncover insights that can improve their performance and make them more transparent and trustworthy. It’s like peering behind the curtain during a magic show: once you understand the tricks, you can appreciate the skill and artistry involved. This research is contributing to new developments in explainable AI, a field that aims to bridge the gap between human understanding and machine decision-making. To learn more about this field of study, dive into the underlying research.

Amir Khan

Amir is a Pakistani-Canadian neuroengineer in Toronto who develops brain-computer interfaces to enhance learning. As a volunteer author, he shares insights on how technology can amplify cognitive abilities, drawing on his South Asian-Canadian perspective.