Abstract

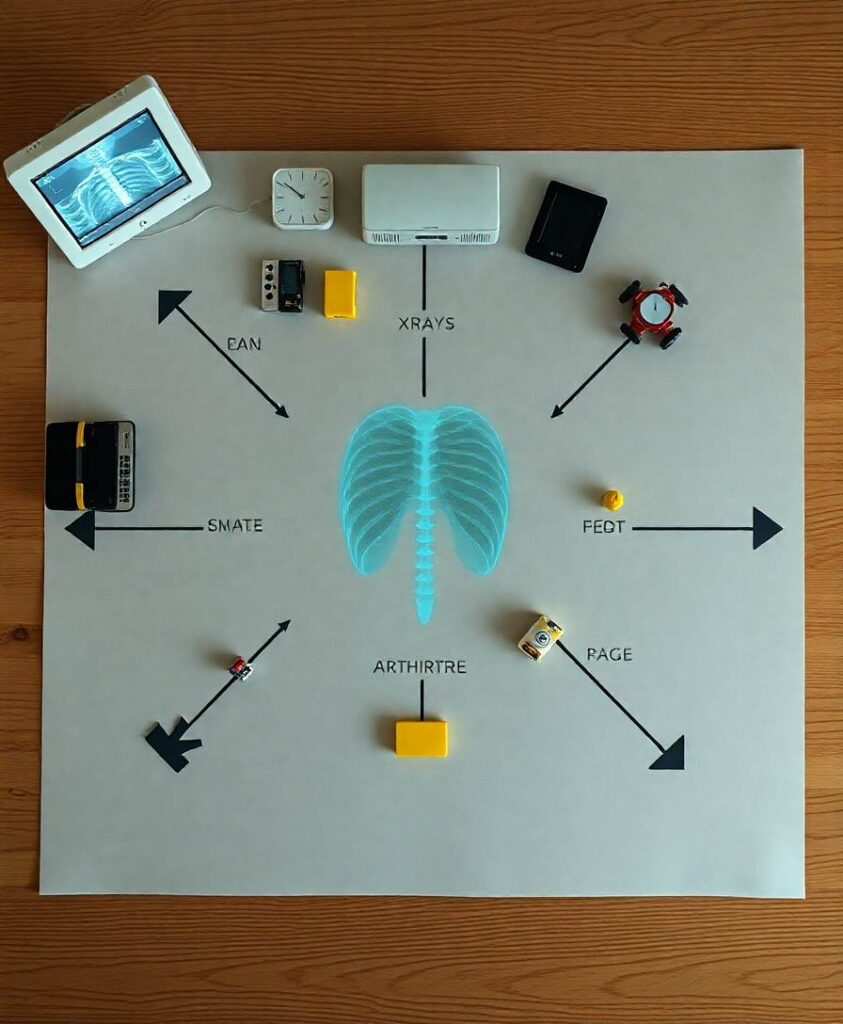

There is a widely held view that visual representations (images) do not depict negation, such as that expressed by the sentence “the train is not coming.” The present study focuses on real-world visual representations, namely photographs and comic (manga) illustrations, and empirically addresses whether humans and machines (that is, modern deep neural networks) can recognize visual representations as expressing negation. By collecting the captions humans gave to images and analyzing the occurrences of negation phrases in them, we present some evidence that humans recognize certain images as expressing negation. Building on this finding, we then examined whether humans and machines can classify novel images as expressing negation. Humans classified the images correctly to some extent, as expected from the caption analysis. In contrast, the image-processing machine learning model performed this classification only at about chance level, well below human performance. Based on these results, we discuss what enables humans to recognize negation in visual representations, highlighting the role of the background commonsense knowledge that humans can exploit. Comparing human and machine learning performance suggests new ways to understand human cognitive abilities and to build artificial intelligence systems with a more human-like ability to understand logical concepts.